Anthropic's Claude Code Security Rattles Cybersecurity Stocks — But What Does It Mean for Developers?

Anthropic's new AI-powered code scanning tool triggered a sharp sell-off in cybersecurity stocks, but its real significance may lie in how it reshapes the developer workflow around vulnerability detection and patching.

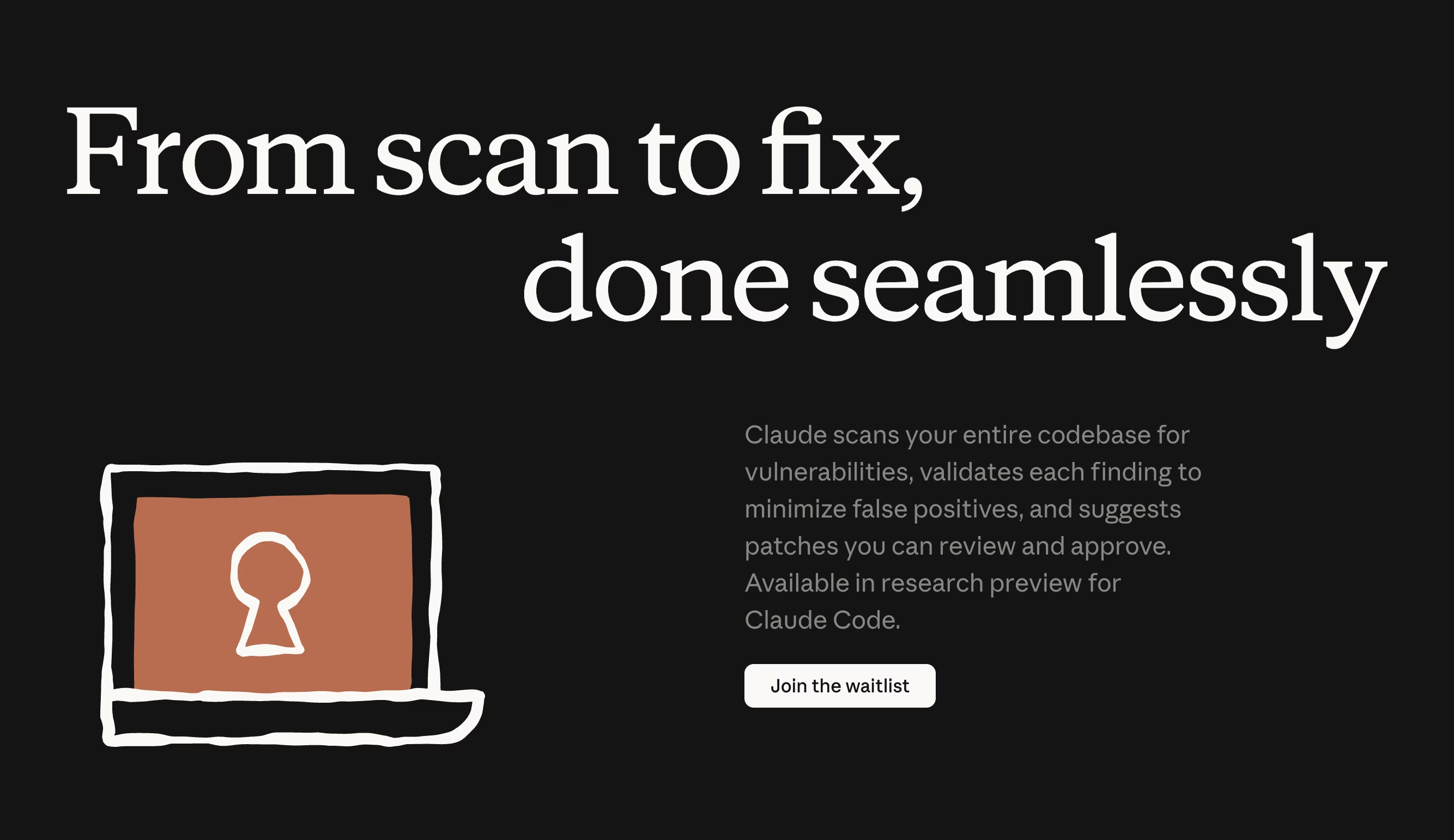

Image by Anthropic

A new AI-powered security tool from Anthropic sent shockwaves through cybersecurity markets on Friday, but the more consequential story may be what it signals for software development teams navigating an increasingly AI-native security landscape.

Anthropic's Claude Code Security, now available as a limited research preview, is a capability built into Claude Code on the web that autonomously scans codebases for security vulnerabilities and suggests targeted patches for human review. The company says it can catch subtle logic flaws that manual reviewers and traditional static analysis tools often miss.

"It scans codebases for security vulnerabilities and suggests targeted software patches for human review, allowing teams to find and fix security issues that traditional methods often miss," Anthropic said in its announcement.

The tool's unveiling hammered cybersecurity stocks. JFrog shares dropped roughly 25%, CrowdStrike fell around 8%, Okta slid over 9%, and GitLab lost more than 8%. Zscaler, Rubrik, Palo Alto Networks, Cloudflare, and SailPoint also saw significant declines. The Global X Cybersecurity ETF fell 4.9%, reaching its lowest level since November 2023.

What It Means for Development Teams

For developers, Claude Code Security represents a shift in how vulnerability detection could be integrated into their daily workflow. Rather than relying solely on periodic security audits or bolt-on scanning tools that run after code is written, this tool embeds AI-driven security analysis directly into the coding environment.

Anthropic noted that its underlying model, Claude Opus 4.6, has already identified more than 500 vulnerabilities in production open-source codebases during internal stress testing. "Every finding goes through a multi-stage verification process before it reaches an analyst," the company stated. That verification step is aimed at false positives, a persistent pain point for developers who often waste hours triaging alerts that turn out to be noise.

The tool is being offered first to Enterprise and Team customers, with expedited access for maintainers of open-source repositories. That prioritization suggests Anthropic sees open-source ecosystems — where under-resourced maintainers often struggle to keep up with security hygiene — as a key beneficiary.

Is the Market Overreacting?

Several analysts and commentators have argued the stock sell-off was disproportionate. Calcalist Tech noted that "Claude's new feature focuses on identifying potential weaknesses in source code during development, long before the software becomes operational," and pointed out that major cybersecurity firms like CrowdStrike and Cloudflare operate in fundamentally different domains — endpoint protection, DDoS mitigation, identity management — that a code-scanning tool does not address.

The publication drew an analogy: "Inventing a home smoke detector does not eliminate the need for a fire department."

Google has operated a similar internal tool based on Gemini for code vulnerability detection, yet still proceeded with its $32 billion acquisition of Israeli cloud security firm Wiz — suggesting that even companies with sophisticated AI-driven code scanning see vast remaining demand for traditional cybersecurity infrastructure.

The Bigger Picture

Still, the trajectory is clear. Anthropic predicted that "a significant share of the world's code will be scanned by AI in the near future." For developers, that means the security review loop is likely to get shorter and more automated. Teams that currently treat security as a late-stage gate may find themselves integrating AI-driven scanning much earlier in the development pipeline.

The tool does not replace security teams or cybersecurity platforms, at least not yet. But it does put pressure on existing static analysis vendors and may force traditional security tooling companies to accelerate their own AI integration. For developers working on large or legacy codebases, the promise of catching bugs that, in Anthropic's words, "had gone undetected for decades" is significant, provided the false positive rate stays low.

Anthropic emphasized that the tool keeps humans in the loop: patches are suggested, not automatically deployed. That design choice reflects both caution and a recognition that autonomous code changes remain a trust hurdle for most engineering organizations.

As AI development tools continue their rapid advance, the line between writing code and securing it is blurring fast.