Google's Gemini 3.1 Pro Arrives With Major Reasoning Gains — Here's What It Means for Developers

Google's latest model update more than doubles reasoning benchmark scores over its predecessor, shipping across AI Studio, Vertex AI, Gemini CLI, and the new Antigravity agentic platform.

Google released Gemini 3.1 Pro on February 19, 2026, bringing what the company calls a significant leap in core reasoning to developers, enterprises, and consumers. The model is available in preview across a wide range of developer tools, signaling Google's push to make advanced AI reasoning a baseline capability for application builders.

A notable benchmark jump

The headline number: Gemini 3.1 Pro scored 77.1% on ARC-AGI-2, a benchmark that tests a model's ability to solve novel logic patterns. Google says that's "more than double the reasoning performance of 3 Pro," the previous generation released in November 2025.

The .1 version increment is itself a departure from Google's recent cadence. As 9to5Google noted, the past two Gemini generations used .5 for the mid-cycle update. The smaller increment suggests Google may be accelerating its release tempo as competition in frontier models intensifies.

Developer access points

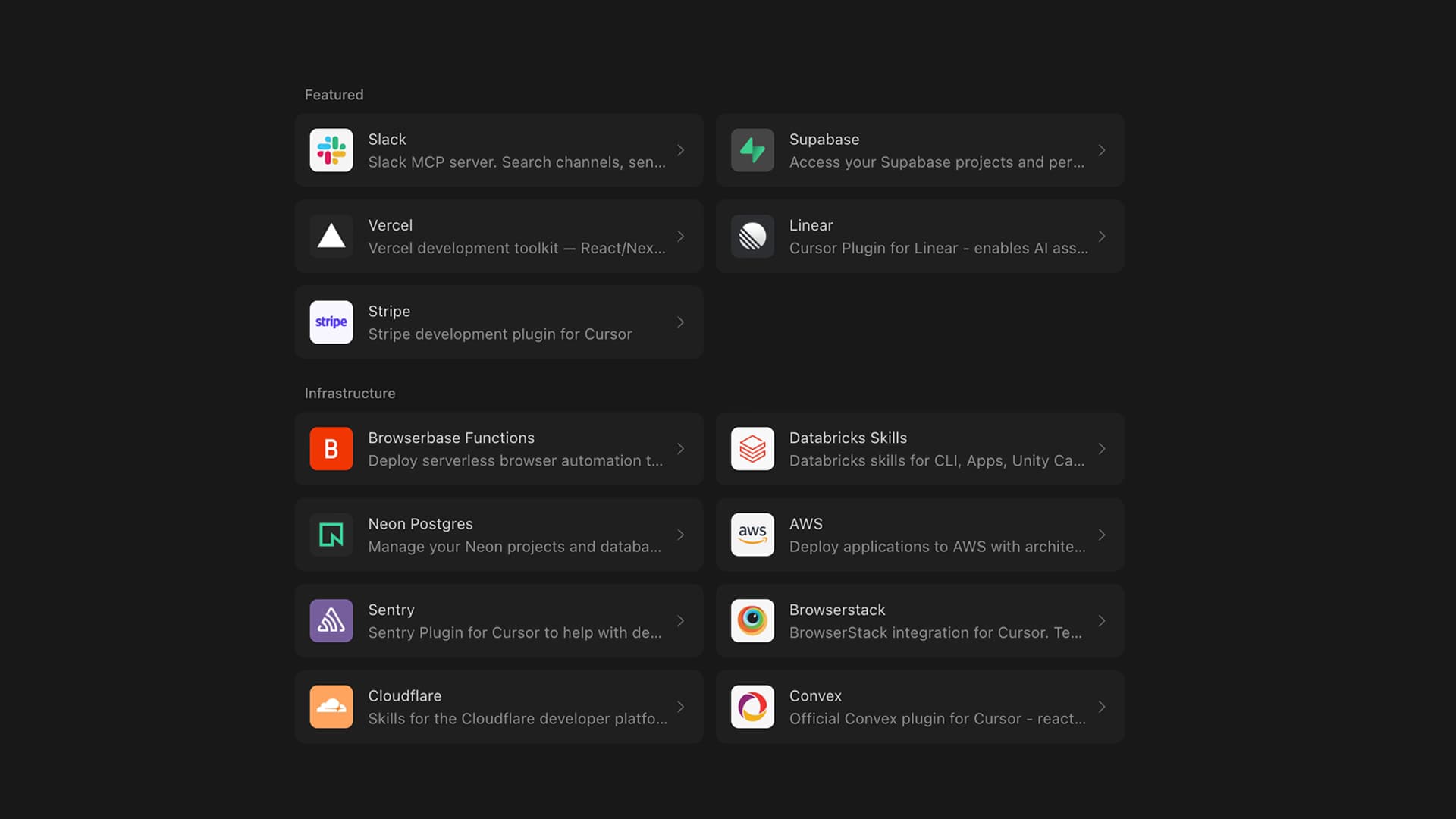

For developers, 3.1 Pro is rolling out in preview through several channels:

- Google AI Studio: the browser-based prompt playground
- Gemini CLI: command-line access
- Google Antigravity: Google's agentic development platform
- Android Studio: integrated into the mobile development IDE
- Vertex AI: for enterprise cloud deployments

The breadth of integration points matters. Because 3.1 Pro is available in AI Studio, Vertex AI, Gemini CLI, Android Studio, and the relatively new Antigravity platform, developers can switch to the upgraded model from inside the tools they already use instead of changing their toolchain.
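As a rough illustration of what "swapping in" the model can look like in application code, the sketch below keeps the model ID in one place and builds a `generateContent` request for the Gemini API's REST endpoint. Both model ID strings and the helper name `build_generate_request` are assumptions made for this example; the announcement as summarized here does not give the identifiers, so check AI Studio or the Vertex AI documentation for the actual values.

```python
import json

# Assumed identifiers: neither string is confirmed by the announcement.
OLD_MODEL_ID = "gemini-3-pro-preview"
NEW_MODEL_ID = "gemini-3.1-pro-preview"

API_BASE = "https://generativelanguage.googleapis.com/v1beta"


def build_generate_request(model_id: str, prompt: str) -> tuple[str, str]:
    """Return (url, json_body) for a generateContent call. No network I/O."""
    url = f"{API_BASE}/models/{model_id}:generateContent"
    body = json.dumps({"contents": [{"parts": [{"text": prompt}]}]})
    return url, body


if __name__ == "__main__":
    # Only the model ID changes between the two requests.
    for model_id in (OLD_MODEL_ID, NEW_MODEL_ID):
        url, body = build_generate_request(model_id, "Explain ARC-AGI-2 briefly.")
        print(url)
        print(body)
```

Sending the request would additionally need an API key (for the REST endpoint, typically passed in the `x-goog-api-key` header). The point of the sketch is only that the request body stays the same and the model ID is the single value that changes.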

Practical implications: what the reasoning upgrade enables

Google showcased several developer-relevant demonstrations of the model: generating website-ready animated SVGs from text prompts, building a live aerospace dashboard by configuring a public telemetry API, and coding a complex 3D simulation with hand-tracking and generative audio.

These examples point to a model that can handle multi-step code generation with real-world API integration, which matters most to developers building agentic workflows or complex front-end prototypes.

"3.1 Pro is designed for tasks where a simple answer isn't enough, taking advanced reasoning and making it useful for your hardest challenges," the Gemini team wrote in the announcement.

The agentic angle

Google explicitly flagged agentic workflows as an area for continued improvement, noting the preview release is meant to "validate these updates and continue to make further advancements in areas such as ambitious agentic workflows before we make it generally available soon."

The inclusion of Antigravity, Google's agentic development platform, among the access points underscores this priority. Developers building autonomous AI agents that chain together multiple reasoning steps, API calls, and code execution stand to benefit most, provided the benchmark gains carry over to real workloads.
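For readers unfamiliar with the pattern, an agent that chains reasoning steps and tool calls can be reduced to a small loop. The following is a minimal sketch, not Antigravity's or Gemini's actual interface: the planner is a scripted stand-in for a model call, and the tool names and their outputs are hypothetical.

```python
from typing import Callable

# Hypothetical tools with canned outputs; a real agent would call live APIs.
TOOLS: dict[str, Callable[[str], str]] = {
    "fetch_telemetry": lambda craft: f"{craft}: altitude ok",
    "to_upper": lambda text: text.upper(),
}


def scripted_planner(history: list[str]) -> tuple:
    """Stand-in for a model call: choose the next action from results so far."""
    if not history:
        return ("call", "fetch_telemetry", "ISS")
    if len(history) == 1:
        return ("call", "to_upper", history[-1])
    return ("finish", history[-1])


def run_agent(planner, tools, max_steps: int = 5) -> str:
    """Ask the planner for an action, run the tool, repeat until it finishes."""
    history: list[str] = []
    for _ in range(max_steps):
        action = planner(history)
        if action[0] == "finish":
            return action[1]
        _, name, arg = action
        history.append(tools[name](arg))
    raise RuntimeError("agent did not finish within max_steps")


if __name__ == "__main__":
    print(run_agent(scripted_planner, TOOLS))
```

In a real system the planner would be a model deciding each step from the accumulated history, which is where stronger reasoning would be expected to help. The `max_steps` cap is a common guard against agents that never terminate.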

What remains unclear

Google has not disclosed pricing changes for 3.1 Pro API access, nor has it published detailed comparisons against competitors like Anthropic's Claude or OpenAI's latest models on the same benchmarks. The model is launching in preview, with general availability timing described only as "soon." Latency and throughput characteristics compared to 3 Pro also remain unconfirmed.

The rapid iteration, from Gemini 3 Pro in November to 3 Deep Think last week to 3.1 Pro today, suggests Google is moving aggressively to close gaps with rival frontier models, or to hold its lead where it has one. For developers, the practical question is whether the benchmark gains translate to measurably better outputs in production applications. The preview period should provide those answers.
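One way a team might test that question during the preview is to run the same task set against both models and compare pass rates. The sketch below assumes each model is wrapped as a plain prompt-to-answer function; the stub models, prompts, and checkers are placeholders invented for the example, not real model behaviour or results.

```python
from typing import Callable, Sequence

# A "model" is any callable from prompt to answer. In practice it would wrap
# an API call to 3 Pro or 3.1 Pro; the stubs below are illustrative only.
Model = Callable[[str], str]
Case = tuple[str, Callable[[str], bool]]


def pass_rate(model: Model, cases: Sequence[Case]) -> float:
    """Fraction of cases whose checker accepts the model's output."""
    if not cases:
        return 0.0
    passed = sum(1 for prompt, check in cases if check(model(prompt)))
    return passed / len(cases)


def baseline(prompt: str) -> str:
    # Placeholder standing in for the older model.
    return "4" if prompt == "2+2?" else "unsure"


def candidate(prompt: str) -> str:
    # Placeholder standing in for the newer model.
    return {"2+2?": "4", "3*3?": "9"}.get(prompt, "unsure")


CASES: list[Case] = [
    ("2+2?", lambda out: out.strip() == "4"),
    ("3*3?", lambda out: out.strip() == "9"),
]

if __name__ == "__main__":
    print("baseline :", pass_rate(baseline, CASES))
    print("candidate:", pass_rate(candidate, CASES))
```

The useful part is the task set: cases drawn from an application's own prompts, with checkers that encode what a correct output looks like, say more about production impact than a public benchmark score does.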